Here is another story of why I always advise DevOps engineers to build T-shaped skills: they let you improve any step of software production!

A cross-functional team gathered for a Kaizen Event to improve the Camunda documentation for the remarkable Camunda 8.8 release. Besides the content, I worked on enhancing the search functionality to give both end users and developers the best experience with the official documentation.

TL;DR

The 3 main enhancements are:

- Updated the DocSearch Crawler config for better search results (that's actually the most critical fix; we had an old config that led to bad search results).

- Added support for a custom page rank, so we can make important pages show first (we know our docs better than the indexing algorithm!).

- Displayed the page breadcrumb path in the search results for better navigation and usability (a small UI change with a huge UX impact!).

As Camunda works in public, you can see my pull request in the Camunda Docs repo with all changes 🚀

1. Overview

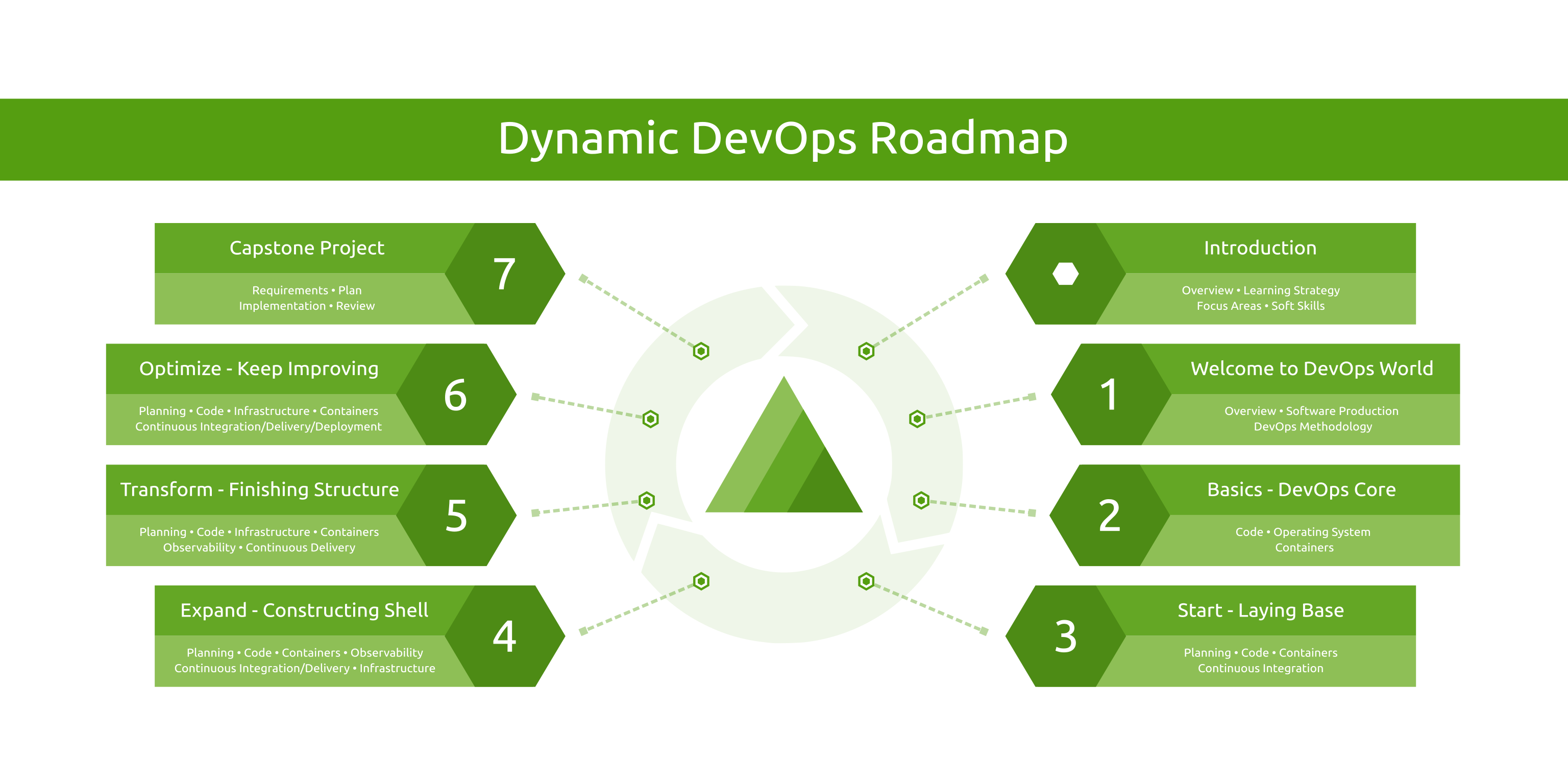

Docusaurus is a popular static site generator that helps you build documentation websites, blogs, marketing pages, and more. It's widely used for documentation by many companies and open-source projects (I even used it in the Dynamic DevOps Roadmap).

Docusaurus supports multiple search options, such as Algolia DocSearch, Typesense DocSearch, and local search. Each search module is configured differently, and that configuration directly affects the quality of your project's search results.

2. The Problem

The Camunda documentation uses Algolia DocSearch. However, at a certain point, the search started returning generic results, important pages were buried, and even when you were certain the content was there, you couldn't find it via the search.

3. The Solution

To get quick and solid results, I narrowed the scope down to 3 pain points:

- How to improve search matching.

- How to show important pages first, regardless of the indexing algorithm.

- How to show the page path in the search dialog (because many pages could have the same title but under different sections).

3.1 DocSearch Crawler Configuration

As mentioned, the search matching was poor, but it had worked well in the past. That's a typical sign of an upgrade issue.

And, as usual, always read the documentation! I quickly found that the DocSearch crawler configuration was outdated and didn't match the official DocSearch recommendations for Docusaurus v3.

Aligning the configuration with those recommendations fixed most of the bad search matching, because the crawler had been building the index from a misconfigured setup.

3.2 Custom Page Rank

We know our product better than any indexing algorithm, so for some pages, we know they are more critical than others and should appear first for certain keywords.

For that, I introduced a way to set the page rank from the page's front matter, which requires 2 changes.

First, a change in the index template src/theme/DocItem/Metadata/index.tsx to read the rank from the front matter and expose it as a meta tag:

```tsx
// Get the page rank from front matter, defaulting to 0 if not set.
// Higher page rank means higher priority in search results.
// This is parsed by Algolia's crawler to prioritize search results.
const pageRank = currentDoc.frontMatter.page_rank || 0;

return (
  <>
    <Metadata {...props} />
    <Head>
      <meta name="docsearch:page_rank" content={pageRank} />
    </Head>
  </>
);
```

Second, an update to the DocSearch Crawler configuration to use that metadata during indexing:

```javascript
new Crawler({
  // [...]
  actions: [
    {
      // [...]
      recordExtractor: ({ $, helpers, url }) => {
        // Page rank.
        // Use the page rank from the Docusaurus front matter if available;
        // if not, calculate it based on the URL depth.

        // Extract the page rank from a meta tag (set from the page's front matter).
        // The tag defaults to "0", so parse it as a number and treat 0
        // (or a missing tag) as "not set".
        const pageRank =
          Number($("meta[name='docsearch:page_rank']").attr("content")) || 0;

        // Set the default page rank based on the number of path segments
        // (ignoring a trailing slash): fewer segments = higher rank.
        const path = new URL(url).pathname.replace(/\/$/, "");
        const depth = path.split("/").filter(Boolean).length;
        const maxDepth = 12; // Depth cap.
        const defaultPageRank = Math.max(0, maxDepth - depth);

        return helpers.docsearch({
          recordProps: {
            pageRank: pageRank || defaultPageRank,
            // [...]
          },
        });
      },
    },
  ],
});
```

Now, if a page doesn't set a rank, the crawler falls back to the page depth; for important pages, the team can set the rank in the front matter like this:

```markdown
---
sidebar_label: Kubernetes with Helm
title: Camunda Helm chart
page_rank: 80
---

The page content goes here...
```
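The fallback rule described above can be sketched as a small pure function, which makes it easy to check outside the crawler. This is only an illustration: `resolvePageRank` and `MAX_DEPTH` are hypothetical names, not part of DocSearch or the Camunda configuration, and the sketch assumes that a missing, non-numeric, or zero value means "not set".

```typescript
// Hypothetical helper (not part of DocSearch): picks the page rank for a URL.
const MAX_DEPTH = 12; // Same depth cap as in the crawler snippet.

function resolvePageRank(metaContent: string | undefined, url: string): number {
  // Assumption: a missing, non-numeric, or zero value means "not set".
  const explicitRank = Number(metaContent);
  if (Number.isFinite(explicitRank) && explicitRank !== 0) {
    return explicitRank;
  }
  // Fall back to the inverse of the URL depth: fewer path segments = higher rank.
  const depth = new URL(url).pathname.split("/").filter(Boolean).length;
  return Math.max(0, MAX_DEPTH - depth);
}

// An explicit rank from the front matter wins.
console.log(resolvePageRank("80", "https://example.com/docs/a/b/")); // 80
// No explicit rank: 3 path segments, so 12 - 3.
console.log(resolvePageRank(undefined, "https://example.com/docs/a/b/")); // 9
```

Keeping the rule in one function like this makes the trade-off visible: a page nested deeper than the cap gets rank 0, so only an explicit `page_rank` can lift it.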

3.3 Page Breadcrumb Path

By default, the officially recommended DocSearch template for Docusaurus v3 doesn't display the page hierarchy in the search window.

This matters because many documentation sites are hierarchical, and the same page title can appear under different sections (e.g., for setting up a specific task using Helm or AWS EC2 instances, the first is categorized under Kubernetes and the second under Amazon as a cloud provider).

For better navigation and usability, I included the page path in the Algolia search index. The idea is simple and requires 2 changes.

First, ensure the breadcrumbs config is enabled (it's enabled by default).

Second, include the page breadcrumb path in level 0 (lvl0) of the DocSearch Crawler configuration so it shows in the search results:

```javascript
new Crawler({
  // [...]
  actions: [
    {
      // [...]
      recordExtractor: ({ $, helpers, url }) => {
        // Extracting the breadcrumb titles for better accessibility.
        const navbarTitle = $(".navbar__item.navbar__link--active").text();
        const pageBreadcrumbTitles = $(".breadcrumbs__link")
          .toArray()
          .map((item) => $(item).text().trim())
          .filter(Boolean);
        const lvl0 =
          [navbarTitle, ...pageBreadcrumbTitles].join(" / ") || "Documentation";

        return helpers.docsearch({
          recordProps: {
            lvl0: {
              selectors: "",
              defaultValue: lvl0,
            },
            // [...]
          },
        });
      },
    },
  ],
});
```

As a result, the page path now shows in the search window. TBH, this should be the default, so I've created a pull request to include it in the DocSearch repo.
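The label logic is easy to try in isolation. The sketch below uses a hypothetical helper (`buildLvl0` is not a DocSearch API) that joins the navbar title and the breadcrumb titles with the same separator as the crawler snippet. Unlike the snippet, it also drops an empty navbar title so the label never starts with a stray separator; that is an assumption about the desired behavior, and the sample titles are made up.

```typescript
// Hypothetical helper (not a DocSearch API): builds the lvl0 label
// from the active navbar title and the page's breadcrumb titles.
function buildLvl0(
  navbarTitle: string,
  breadcrumbTitles: string[],
  fallback = "Documentation",
): string {
  const parts = [navbarTitle, ...breadcrumbTitles]
    .map((title) => title.trim())
    .filter(Boolean); // Drop empty titles to avoid labels like " / Helm".
  return parts.join(" / ") || fallback;
}

console.log(buildLvl0("Docs", ["Kubernetes", "Helm"]));
// Docs / Kubernetes / Helm
console.log(buildLvl0("", []));
// Documentation
```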

4. Conclusion

As a DevOps engineer, your focus should always be on the end-to-end software production process, with a customer-centric approach, not just on one part of it. For that reason, you should build T-shaped skills that enable you to handle any case and improve the UX at all levels.

I already discussed why your DevOps learning roadmap is broken and what to do about it.

Happy DevOps-ing :-)