Last year, I started a new position as an SRE, and it's been an exciting journey so far!

I'm part of the SRE team and of cross-functional squads working in an Agile environment to build a DevOps culture and make sure the platform is always reliable and scalable.

When I joined the company, as someone who has worked with monitoring and alerting since day one of my career, I saw a lot of "alert fatigue", and it was clear that the handling of alerts and incidents had room for improvement.


Situation

- No tracking of incidents and downtime! That meant the same issues were handled in an ad-hoc manner and could be fixed again and again!

- Every month, there were several failures and at least one global downtime lasting 10-30 minutes!

- There was an on-call rotation squad called "platform", made up of backend and infrastructure engineers. However, there was no separation between application alerts and infrastructure alerts; there was only one flow for all alerts. All alerts went to a backend engineer as the first level, then were escalated to SRE as the second level (which means a BE engineer could get alerts unrelated to their work).

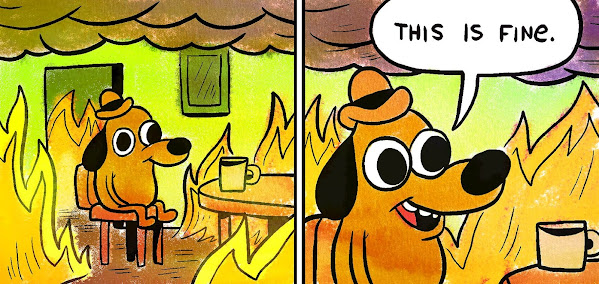

- The SRE team was overwhelmed handling alerts, and the backend engineers had a hard time looking into issues because of the alert noise!

- Many important alerts didn't go to the on-call route at all, and some other alerts were just acked and stayed open forever.

- The alerts didn't have many details and didn't explain why they were created that way. They were also poorly formatted and not very informative.

- There was no single place to view all alerts from all clusters. There are multiple clusters, each with its own AlertManager and even multiple Prometheus instances per cluster, and all of them sent alerts to the Slack channel, which became just a stream of noise.

- A lot of noise in the Slack alerting channel, with no way to see what was going on unless you knew exactly what each alert was about.

- We were on the wrong PagerDuty pricing plan, paying an extra thousand dollars for a plan we didn't need and features we didn't use at all! PagerDuty was simply used as a router for on-call notifications/calls!

Results

Before looking at how all those issues were fixed, let's start with the results!

By applying SRE and DevOps practices, the teams became more proactive rather than reactive. That means less time dealing with issues and more time for what really matters.

In numbers:

- On-call direct running costs reduced by 75%!

- On-call incidents reduced by 67%!

- For 4 months in a row (September to December 2019), there was zero global downtime! (It used to happen at least once a month.)

- For 2 months in a row (November and December 2019), there were zero on-call incidents! (They used to happen 4-5 times a month.)

Other enhancements:

- Better overview of the alerts across all clusters.

- Set up proper routes and escalation policies so each participant in the on-call rotation gets the right alerts (so there's no need to wake up at 3 AM just to route an alert!).

- Enhanced productivity by reducing context switching: a weekly schedule where one engineer handles alerts during working hours keeps the rest of the team more productive.

- Backend developers are more aware of the architecture and participate in managing the infrastructure via infrastructure-as-code.
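To make the weekly schedule idea concrete, here is a minimal Python sketch of a working-hours rotation. It's only an illustration: the roster names, the start date, and the 09:00-18:00 working hours are hypothetical, and in practice a scheduling tool (e.g. OpsGenie schedules) handles this for you.

```python
from datetime import date, datetime
from typing import Optional

# Hypothetical roster and rotation start (a Monday); not taken from the original setup.
ROSTER = ["alice", "bob", "carol", "dave"]
ROTATION_START = date(2019, 9, 2)
WORK_HOURS = range(9, 18)  # assumed working hours: 09:00 to 17:59


def alert_handler(now: datetime) -> Optional[str]:
    """Return the engineer handling alerts at `now`, or None outside working hours."""
    if now.weekday() >= 5 or now.hour not in WORK_HOURS:
        # Weekends and evenings fall back to the regular on-call flow.
        return None
    # One engineer per calendar week, cycling through the roster.
    weeks_elapsed = (now.date() - ROTATION_START).days // 7
    return ROSTER[weeks_elapsed % len(ROSTER)]


print(alert_handler(datetime(2019, 9, 10, 11)))  # Tuesday of week 2, prints "bob"
```

Returning `None` outside working hours keeps the two concerns separate: the weekly handler absorbs daytime noise, while nights and weekends stay with the on-call escalation flow.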

Actions and steps

Without further ado, let's have a deep dive into details.

1. Observation and taking notes

Basically, this is always my first step in any new job. In the first 3 months, I just learn about the company, the team, and how things work, get used to the environment, and take notes.

Usually, after 2 months, I have enough notes to put together a high-level plan from my perspective and discuss it with the team to answer some questions and validate some pain points.

It's worth mentioning that I usually write down all the concerns I have, but by the 3rd or 4th month, after clarifying and prioritizing my notes, the final list is much smaller.

2. Change management

Now about 3-4 months have passed, and it's clear what needs to change. But as you know, change is a risk! So it should be well planned, and the essential aspect of this plan is "people". When people are aware of "what's next", they are more likely to adopt the change at an earlier stage, and the results are pretty good.

During a change, there is usually a temporary drop in something related to the change (e.g. productivity, stability, velocity); then it rises steeply again, ending up even better than the starting point.

I've already discussed that in this post: DevOps, J-Curve, and change management.

3. DevOps transformation

Frankly, the old model of splitting ops from devs doesn't work anymore! Also, calling a team SRE while doing exactly the old sysadmin-style work doesn't help at all! IMO, to have proper SRE, you first need solid ground, which is the DevOps mindset.

As you know, DevOps doesn't happen overnight; thus, it's better to start working on it early by collecting feedback from other teams and understanding their pain points and how to make their lives easier.

I always start with quick wins, by looking for the people most relevant to SRE even if they are not technically part of the SRE team, like backend engineers, and by finding people who are excited about DevOps approaches.

This step takes time, but for that same reason it's better to start it early, and it really pays off.

More details about DevOps can be found in the following posts:

4. Incident management

Well, IMO, this step is one of the most critical, especially if you are working with client-facing services. Constantly fixing issues without tracking them could lead to fixing the same issue again and again!

In the first 3 months, it was clear that the SRE team was fixing the same issues every month! So I started an incident report page in the wiki to track incidents. To make it an easy start, I began with just the on-call incidents, which usually involve downtime.

In fact, this step led to a 67% reduction in on-call incidents! That's because, as mentioned before, tracking was missing, so it was hard to see the full picture. By writing informative incident reports, doing post-mortems, and holding a monthly review of all incidents, we found that the root causes of that 67% of incidents were just 3 problems! Once those were fixed, the system became much more stable.

You don't need to go wild; just keep the incident report simple and clear, and customize it to your environment. The internet is full of incident report posts and templates, but my favorite one is from RubyGarage: Incident Report Writing: What to Do If Things Go Off the Rails in Production.
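To give an idea of how small an incident report can be, here is a minimal Python sketch of a report record plus the kind of grouping a monthly review boils down to: counting incidents per root cause and checking how much of the total the top few explain. The field names and the `monthly_review` helper are hypothetical, not the actual wiki template.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date


@dataclass
class IncidentReport:
    """A deliberately small incident report; field names are illustrative only."""
    day: date
    title: str
    impact: str           # e.g. "global downtime, 15 min"
    root_cause: str       # filled in during the post-mortem
    action_items: list[str]


def monthly_review(reports: list[IncidentReport], top_n: int = 3):
    """Return the top_n most frequent root causes and the share of incidents they explain."""
    counts = Counter(r.root_cause for r in reports)
    top = counts.most_common(top_n)  # ties keep first-seen order
    share = sum(n for _, n in top) / len(reports) if reports else 0.0
    return top, share
```

If a handful of root causes explain most of the incidents, fixing those few first gives the biggest payoff, which is exactly what a regular review makes visible.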

5. Alert management platform

Another issue was the alert management platform. Prometheus' AlertManager was used per Kubernetes cluster. Non-on-call alerts were forwarded to Slack, and on-call alerts were sent to PagerDuty, which then sent them to Slack as well! As a result, each alert had a different format/details depending on its source. Not only that, but the alerts were also scattered across many alert management systems.

Moreover, when I checked the PagerDuty plan, I realized that we were totally on the wrong pricing plan! We neither used nor needed most of what we paid for, and a downgraded plan would have been more than sufficient. Not only that, PagerDuty is actually quite expensive for startups and small teams. We were even on a custom old plan where we paid $60 per month!

In the past, I've worked with OpsGenie, VictorOps, and PagerDuty, and the best one ever is OpsGenie, especially after Atlassian acquired it. OpsGenie has an intuitive UI, rich features, and a pretty reasonable pricing model. I had also done this kind of migration before, so it was an easy pick for me and didn't take much time.

So migrating to OpsGenie and using it as the "source of truth" for all alerts helped with:

- Better overview of alerts: any team member can view/list any alert from any cluster at any time, even non-on-call alerts.

- The Slack channel is still used to stay informed about alerts, but the alerts now come from OpsGenie, and thanks to OpsGenie's Slack integration, we can even list and interact with alerts from Slack.

- All alerts have the same format, including location/cluster name, alert details, tags, responsible team, etc.

- Just by migrating to OpsGenie, we saved about 60% of on-call operations costs! (This was a by-product, not the primary goal, but in a startup it's always nice to save a few thousand dollars.)
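The "same format" idea can be sketched in Python as a small normalization step over an Alertmanager webhook payload (a JSON object with an `alerts` list, where each alert carries `labels` and `annotations`). This is an illustration only: in practice the Alertmanager-to-OpsGenie integration does this mapping for you, and the `cluster`, `team`, and `severity` labels, plus the `sre` fallback team, are assumed labeling conventions.

```python
from dataclasses import dataclass, field


@dataclass
class NormalizedAlert:
    name: str
    cluster: str
    severity: str
    team: str
    description: str
    tags: list[str] = field(default_factory=list)


def normalize(payload: dict) -> list[NormalizedAlert]:
    """Flatten an Alertmanager webhook payload into one uniform alert format."""
    result = []
    for alert in payload.get("alerts", []):
        labels = alert.get("labels", {})
        annotations = alert.get("annotations", {})
        # Assumed label conventions; missing labels get explicit defaults.
        cluster = labels.get("cluster", "unknown")
        severity = labels.get("severity", "info")
        result.append(NormalizedAlert(
            name=labels.get("alertname", "unnamed"),
            cluster=cluster,
            severity=severity,
            team=labels.get("team", "sre"),  # hypothetical fallback owner
            description=annotations.get("description", annotations.get("summary", "")),
            tags=[f"cluster:{cluster}", f"severity:{severity}"],
        ))
    return result
```

Whatever cluster an alert comes from, it ends up with the same fields, which is what makes a single overview across clusters possible.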

Afterward, I set up the integrations, teams, routing rules, and escalation policies for all alerts (info, critical, server/on-call), to be used in the next step: the alert flow.
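An escalation policy is essentially an ordered list of "if nobody has acknowledged the alert after N minutes, page X" steps. Here is a minimal Python sketch of that idea; the step names and timings are hypothetical and not our actual OpsGenie configuration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EscalationStep:
    after_minutes: int  # minutes without acknowledgement before this step fires
    notify: str         # who gets paged at this step (hypothetical names)


# Hypothetical policy: the owning team first, then the SRE on-call, then a lead.
POLICY = [
    EscalationStep(0, "team-on-call"),
    EscalationStep(15, "sre-on-call"),
    EscalationStep(30, "engineering-lead"),
]


def who_is_paged(minutes_unacked: int, policy: list[EscalationStep] = POLICY) -> list[str]:
    """Everyone paged so far for an alert that nobody has acknowledged yet."""
    return [step.notify for step in policy if minutes_unacked >= step.after_minutes]
```

For example, `who_is_paged(20)` returns `["team-on-call", "sre-on-call"]`: the owning team was paged immediately and the SRE on-call joined after 15 minutes without an ack.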

6. Alert flow

In one way or another, all the previous steps aim at a better alert flow: one that uses multiple tags, routes, escalation policies, and so on, which means less alert noise/fatigue and more focus/productivity.

One of the issues was that the platform squad had only one alert flow, i.e. all alerts went to a backend engineer as the first level, then were escalated to SRE as the second level. A disk is full? The alert goes to BE, who then escalates it to SRE! A Kubernetes node is unreachable? The alert goes to BE, who then escalates it to SRE! And so on.

With this poor flow, backend engineers were overwhelmed with noise and unrelated alerts! As a result, the first on-call level wasn't efficient at all (which is typical when alerting is noisy), and the SRE team ended up handling both application and infrastructure alerts (so "you build it, you run it" no longer applied!).

So after the teams, routes, and escalation policies were done in OpsGenie, it was time to map them in Prometheus/AlertManager. Each alert was reviewed, given tags, and assigned to a team, so when AlertManager sends the alert to OpsGenie, it goes to the right person :-)
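The label-to-team mapping can be sketched in Python as a simplified version of how Alertmanager walks its child routes: in order, stopping at the first match unless `continue: true` is set. The receiver names and label values below are hypothetical; the real mapping lives in Alertmanager's `route` configuration.

```python
from dataclasses import dataclass, field


@dataclass
class Route:
    receiver: str
    match: dict[str, str] = field(default_factory=dict)  # exact label matches


# Hypothetical routes, checked in order. Info alerts go first so they never page anyone.
ROUTES = [
    Route("slack-info", {"severity": "info"}),
    Route("opsgenie-backend", {"team": "backend"}),
    Route("opsgenie-sre", {"team": "sre"}),
]
DEFAULT_RECEIVER = "opsgenie-sre"  # catch-all, like the top-level route's receiver


def route(labels: dict[str, str], routes: list[Route] = ROUTES, default: str = DEFAULT_RECEIVER) -> str:
    """Return the receiver of the first route whose match labels all agree with `labels`."""
    for r in routes:
        if all(labels.get(key) == value for key, value in r.match.items()):
            return r.receiver
    return default
```

Order matters: because the `severity: info` route comes first, an info alert tagged `team: backend` lands in Slack instead of paging the backend on-call.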

Finally comes the knowledge-sharing step, so all teams are on the same page. I gave an internal tech talk about enhancing the alerting flow and how we did it.

Summary

As you can see, most of those steps happened in parallel, following an Agile iterative approach. It didn't need much dedicated time, just a good plan. And in the end, it really paid off for the SRE team and the whole company! Having a proper alert flow with incident reports not only saves time and costs but also increases engineers' productivity and avoids firefighting and on-call burnout.

Once the new setup was in place, the system became much more stable and reliable because we were able to track issues and fix them efficiently, so there was no global downtime and no unnecessary on-call incidents for months, and teams became more autonomous.

The next steps (if needed!) would be collecting and monitoring some incident management KPIs.
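As a starting point, two common incident management KPIs are mean time to acknowledge (MTTA) and mean time to resolve (MTTR). Here is a minimal Python sketch, assuming each incident records when it was opened, acknowledged, and resolved; the `Incident` type is hypothetical, and MTTR here is measured from when the incident opened.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Incident:
    opened: datetime
    acknowledged: datetime
    resolved: datetime


def _mean(deltas: list[timedelta]) -> timedelta:
    if not deltas:
        raise ValueError("no incidents to average")
    return sum(deltas, timedelta()) / len(deltas)


def mtta(incidents: list[Incident]) -> timedelta:
    """Mean time from opening to first acknowledgement."""
    return _mean([i.acknowledged - i.opened for i in incidents])


def mttr(incidents: list[Incident]) -> timedelta:
    """Mean time from opening to resolution."""
    return _mean([i.resolved - i.opened for i in incidents])
```

Tracked month over month, these two numbers show whether alerts reach the right people quickly (MTTA) and whether fixes are getting faster (MTTR).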

That's it; now the SRE team can spend more time doing what really matters! And of course, sleep well at night :-)